Table of Content

TL;DR

Intro & Value Proposition

Contextual Relevance

How the System Works: Architecture Overview

Data Loading and Preprocessing: The Foundation

Weekly Reporting: Aggregating Daily Data into Actionable Insights

Statistical Anomaly Detection: Two Methods for Robust Detection

Edge Cases Handled:

Integrating Salesforce Leads: From Performance to ROI

Exporting to Analytics Platforms: GA4 and Amplitude Integration

Code Organization and Best Practices

File Structure:

Performance Metrics Explained

Results & Impact

Conclusion

Acknowledgements

Backhistory and Contributions:

About the Author

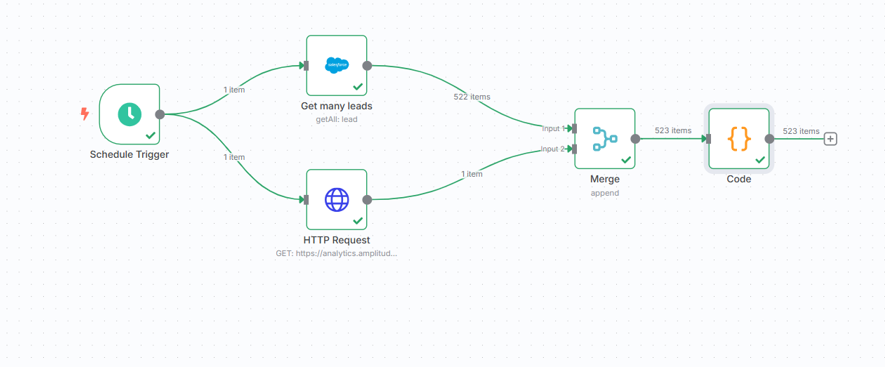

Ad Campaign Performance Analysis using n8n: Weekly Reporting and Statistical Anomaly Detection

A Production-Ready System for Automated Weekly Reporting, Anomaly Detection, and CRM-Integrated ROI Insights

TL;DR

What Was Built: An automated Python system for weekly campaign performance reporting and statistical anomaly detection that integrates with Salesforce and exports to GA4/Amplitude.

Biggest Challenges:

- Handling edge cases in statistical calculations (division by zero, insufficient data points)

- Integrating data from multiple sources with different formats

- Implementing robust anomaly detection that works across different metric distributions

Key Solution: Dual-method anomaly detection (Z-score + IQR) combined with per-campaign analysis ensures comprehensive issue detection. Modular architecture with comprehensive error handling makes the system production-ready.

Key Learning: Statistical methods are often sufficient for anomaly detection in marketing metrics, providing interpretable results without the complexity of machine learning models.

Future Direction: Add automated alerting, visualization dashboards, and extend to additional data sources for unified marketing analytics.

Intro & Value Proposition

In digital marketing, spending thousands on campaigns without catching performance anomalies quickly can waste budgets and miss optimization opportunities. This article walks through building an automated system that generates weekly performance reports and detects anomalies using statistical methods—helping marketers proactively identify issues before they impact ROI.

What makes this approach valuable? Instead of manually reviewing spreadsheets or waiting for monthly reports, you get automated weekly insights with statistical anomaly detection that flags unusual patterns in spending, conversions, clicks, and other key metrics. The system integrates campaign performance data with Salesforce leads for comprehensive ROI analysis and can export events to GA4 and Amplitude for deeper analytics.

This project demonstrates how to build a practical, production-ready analytics tool using Python, pandas, and statistical methods. Whether you're a marketing analyst looking to automate reporting, a data scientist building analytics tools, or a developer interested in anomaly detection, this system provides a complete template you can adapt to your needs.

Contextual Relevance

Real-World Use Cases This Directly Addresses:

- How to detect unusual spending patterns in multi-platform ad campaigns before budget gets wasted

- Best practices for weekly campaign reporting that aggregates daily data into actionable insights

- Integrating campaign performance data with CRM data (Salesforce) for end-to-end ROI analysis

- Building automated anomaly detection systems using statistical methods without requiring machine learning

- Exporting campaign events to analytics platforms like GA4 and Amplitude for unified reporting

Example Questions This System Answers:

- Which campaigns showed unusual performance this week compared to historical averages?

- What's the weekly trend for each campaign across different platforms (LinkedIn, Google Ads, Facebook)?

- How do our campaign conversions correlate with Salesforce leads?

- What's the cost per acquisition (CPA) and return on ad spend (ROAS) for each campaign?

System Overview

How the System Works: Architecture Overview

The system is built around a single `CampaignAnalyzer` class that encapsulates all analysis functionality. This design provides a clean, reusable interface for campaign performance analysis while keeping the code organized and maintainable.

The analysis pipeline follows these logical steps:

1. Data Loading & Preprocessing: Load campaign performance and Salesforce leads data, parse dates, and calculate derived metrics

2. Weekly Aggregation: Group daily data by week, campaign, and platform to create weekly summaries

3. Anomaly Detection: Apply statistical methods (Z-score or IQR) to identify unusual patterns

4. Performance Summarization: Generate campaign-level and platform-level summaries

5. Data Integration: Merge performance data with leads data for ROI analysis

6. Export: Optionally export events to GA4 and Amplitude for further analysis

Each step is implemented as a separate method, allowing for flexible usage—you can run just weekly reporting, just anomaly detection, or the full analysis pipeline

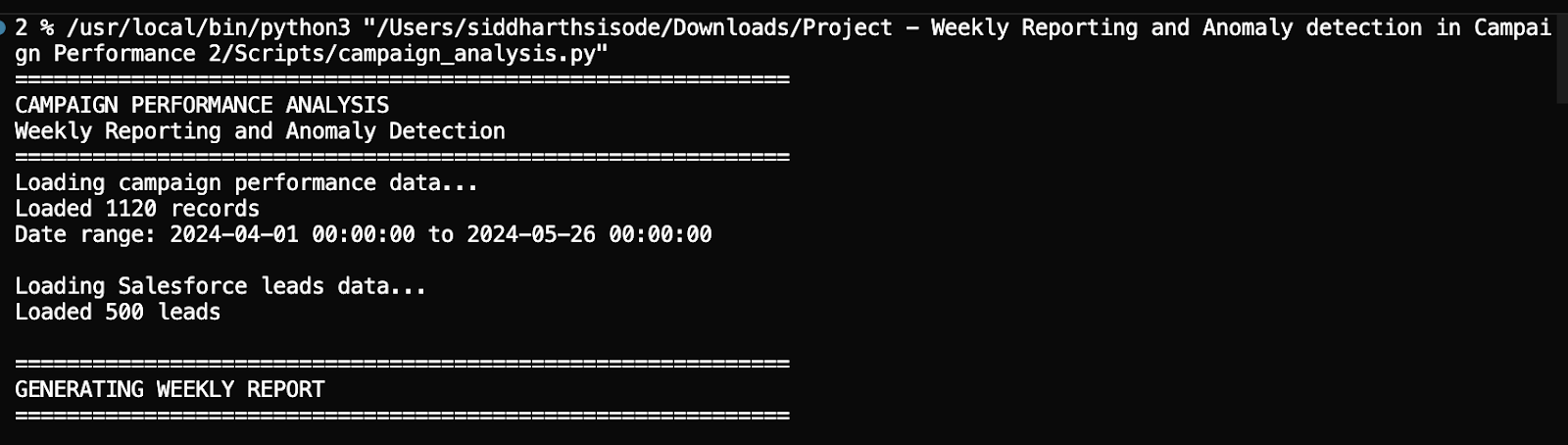

Data Loading and Preprocessing: The Foundation

The system starts by loading campaign performance data from CSV files. The `load_data()` method handles:

- Date Parsing: Converting date strings to datetime objects with format detection

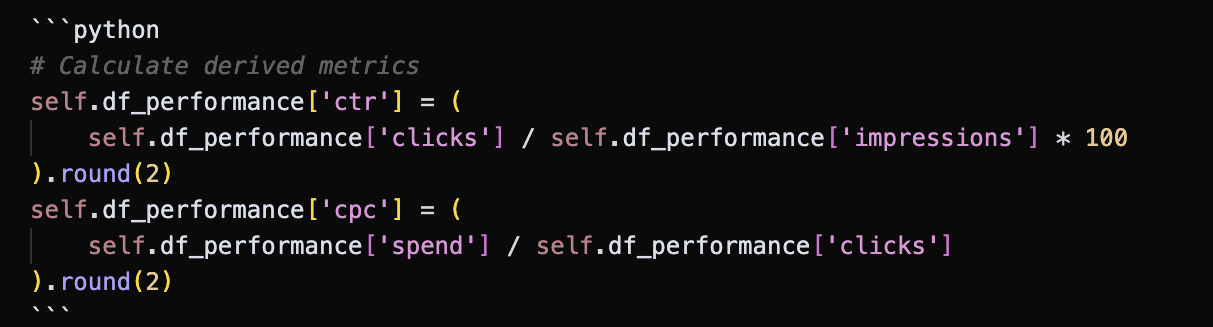

- Metric Calculation: Computing derived metrics like CTR (Click-Through Rate), CPC (Cost Per Click), CPA (Cost Per Acquisition), and conversion rates

- Data Cleaning: Replacing infinite values (from division by zero) with NaN for proper handling

Key Design Decision: Instead of requiring pre-calculated metrics, the system calculates them during loading. This ensures consistency and allows the same input format to be used regardless of whether metrics were pre-calculated.

Complexity Handled: Division by zero when campaigns have zero clicks or conversions. The system handles this gracefully by allowing NaN values and then using pandas' built-in aggregation methods that skip NaN values.

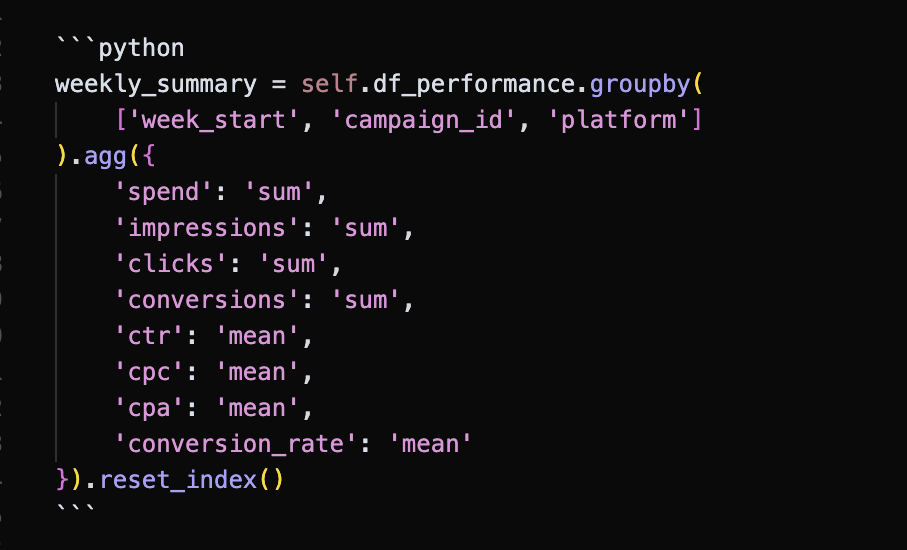

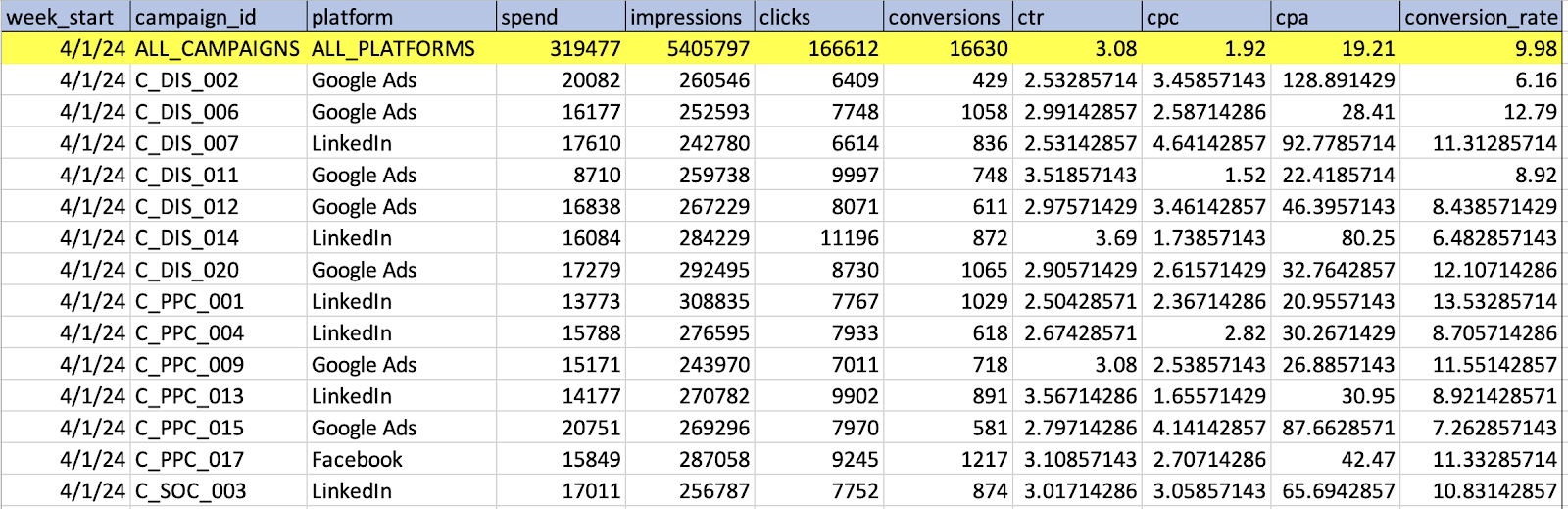

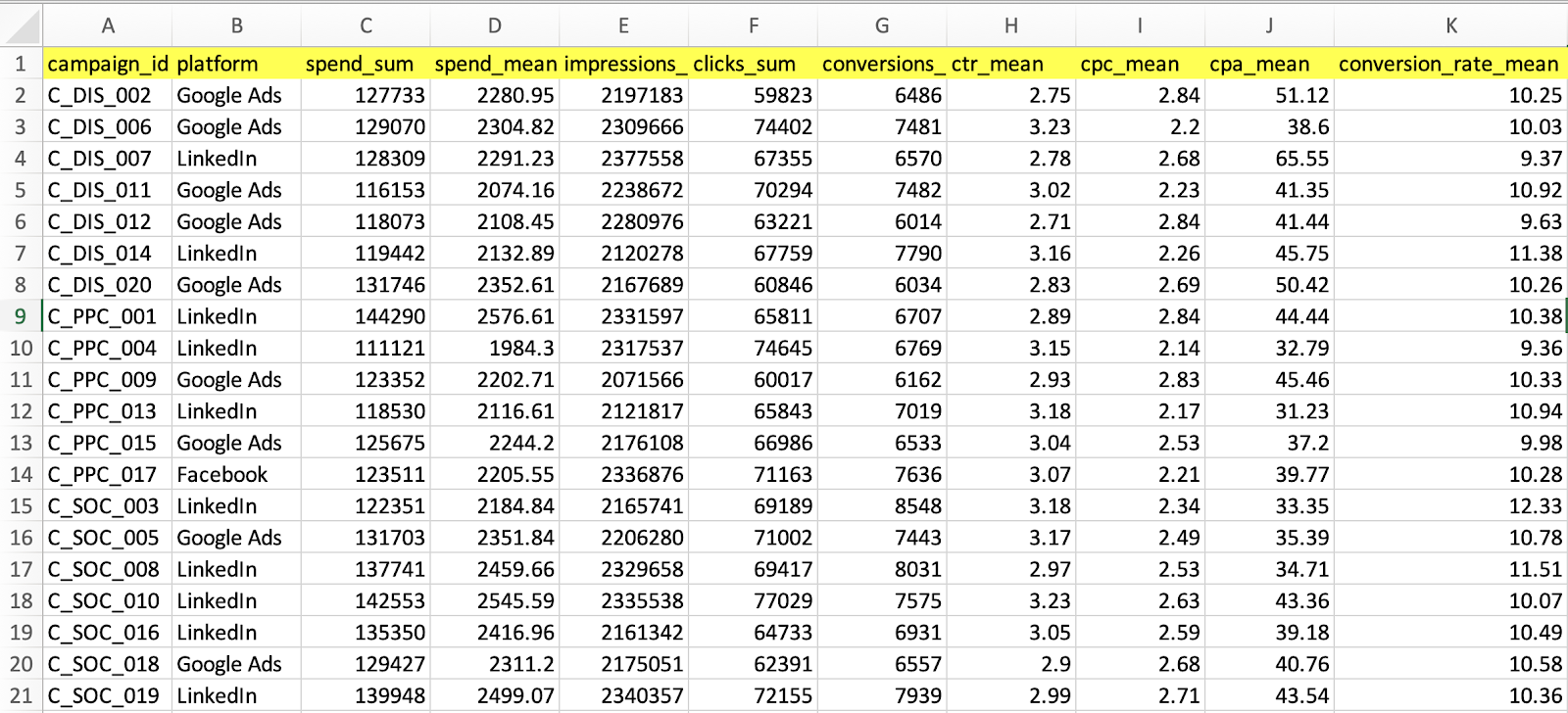

Weekly Reporting: Aggregating Daily Data into Actionable Insights

The `generate_weekly_report()` method transforms daily campaign data into weekly summaries. This aggregation is essential because:

- Trend Analysis: Weekly patterns reveal trends that daily fluctuations might obscure

- Resource Planning: Weekly summaries help with budget allocation and resource planning

- Executive Reporting: Weekly reports are more digestible for stakeholders than daily data

The implementation uses pandas' `groupby()` functionality to aggregate by week, campaign, and platform:

Design Decision: Using `sum()` for absolute metrics (spend, impressions, clicks, conversions) and `mean()` for calculated rates (CTR, CPC, CPA). This ensures weekly totals are accurate while preserving rate averages.

The method also generates overall weekly totals (across all campaigns and platforms), providing both detailed breakdowns and high-level summaries in a single output file.

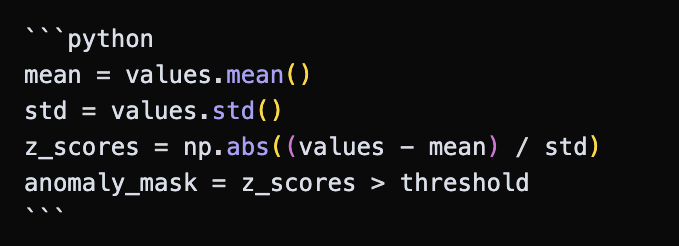

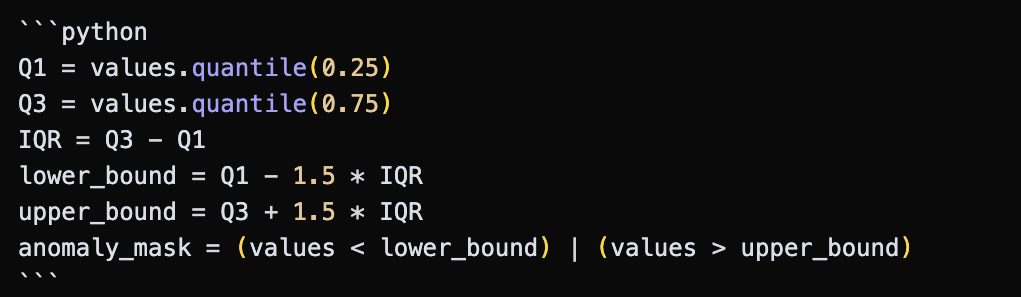

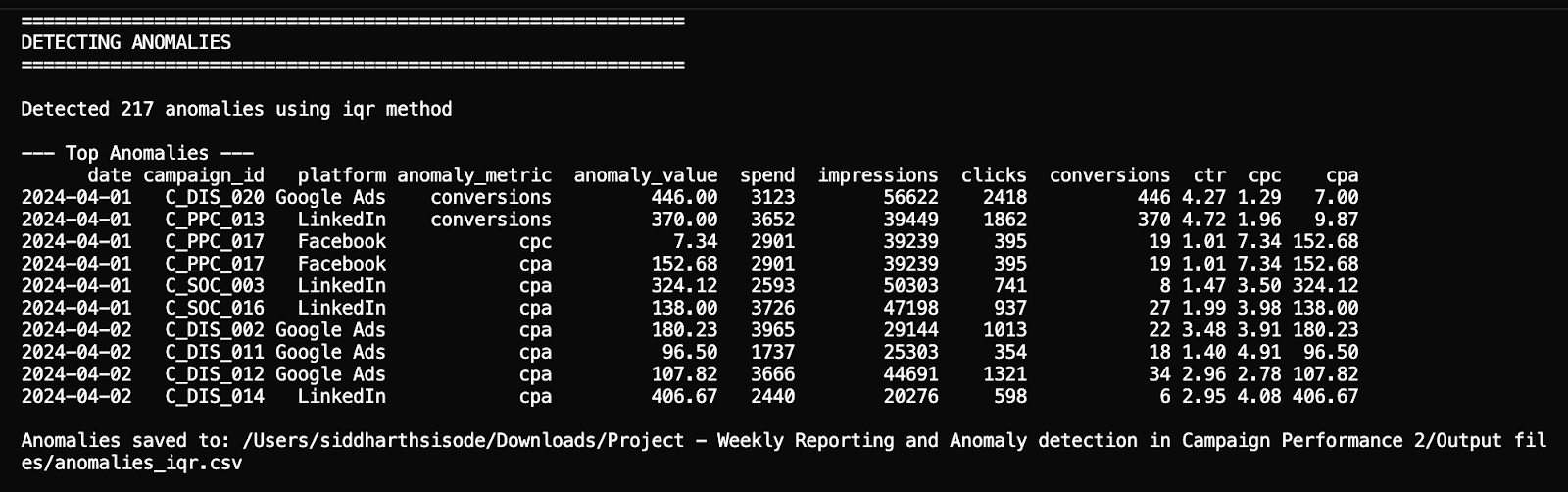

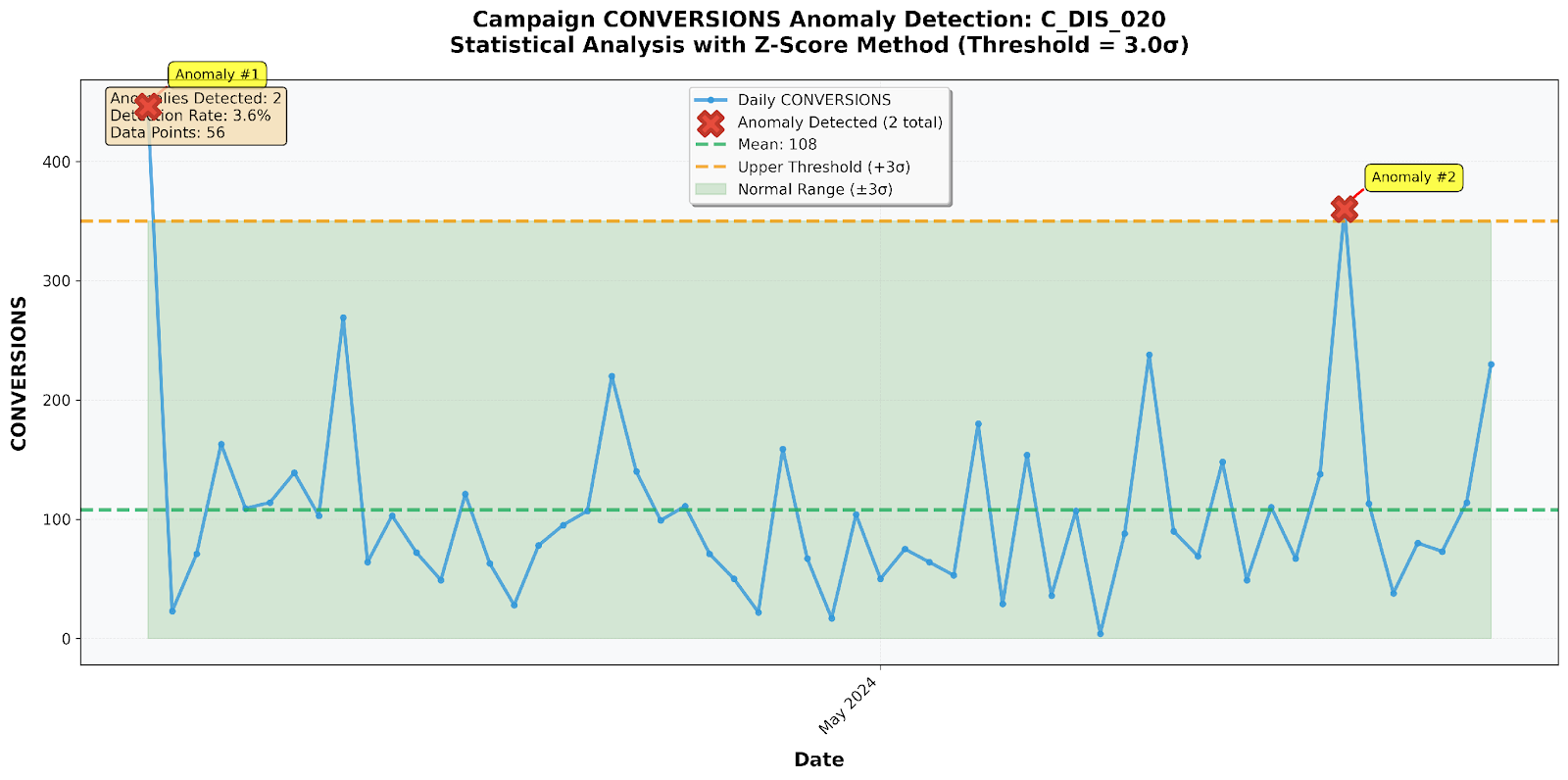

Statistical Anomaly Detection: Two Methods for Robust Detection

The system implements two statistical anomaly detection methods, each suited for different data distributions:

1. Z-Score Method: Best for Normally Distributed Data

The Z-score method identifies data points that deviate significantly from the mean. For a threshold of 3.0, it flags values more than 3 standard deviations away:

Why This Works: Z-score is effective when metrics follow a normal distribution. For example, daily spend might cluster around an average with occasional outliers.

Limitation: Z-score can miss anomalies in skewed distributions or when variance is high. That's why we also implement IQR.

2. IQR Method: Robust for Non-Normal Distributions

The Interquartile Range (IQR) method uses the 1.5 * IQR rule—a standard statistical approach for outlier detection that doesn't assume normal distribution:

Why Both Methods: Running both methods provides comprehensive coverage. If Z-score misses an anomaly due to non-normal distribution, IQR often catches it, and vice versa.

Implementation Complexity: The system applies anomaly detection per-campaign and per-metric. This ensures that anomalies are detected relative to each campaign's historical performance, not against a global average. For example, a $1000 spend might be normal for Campaign A but anomalous for Campaign B.

Edge Cases Handled:

- Campaigns with fewer than 3 data points are skipped (insufficient data for statistical analysis)

- Zero standard deviation is handled (occurs when all values are identical)

- Missing values are excluded from calculations

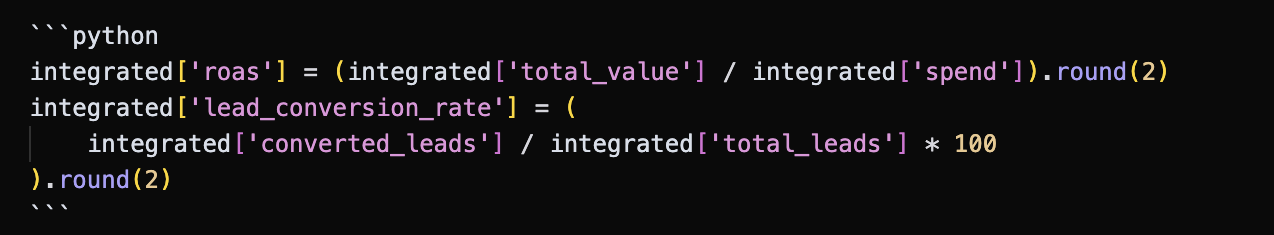

Integrating Salesforce Leads: From Performance to ROI

The `integrate_leads_analysis()` method merges campaign performance data with Salesforce leads data to calculate comprehensive ROI metrics:

Business Value: This integration answers critical questions:

- What's the return on ad spend (ROAS) for each campaign?

- How many leads converted to opportunities or customers?

- Which campaigns generate the highest-value leads?

Design Decision: Using an outer join ensures campaigns without leads data are still included in the output, maintaining comprehensive reporting even with incomplete data.

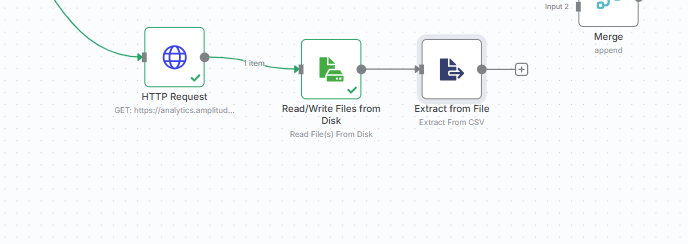

Exporting to Analytics Platforms: GA4 and Amplitude Integration

The system includes two additional scripts for exporting events to analytics platforms:

1. GA4 Synthetic Events (`ga4_synthetic_events.py`)

This script sends campaign engagement events to GA4 using the Measurement Protocol API. It's useful for:

- Testing GA4 integration

- Sending historical data to GA4

- Creating unified analytics dashboards

Key Features:

- Random event generation for testing

- Rate limiting to avoid API throttling

- Comprehensive error handling

2. Amplitude Events Import (`amplitude_import_campaign_id.py`)

Data Context: For this project, the script was used to upload synthetically generated data (created using ChatGPT) to Amplitude for testing and demonstration purposes. The `amplitude_events_campaign_id.csv` file contains this synthetic data, which was then uploaded using this script.

In production environments, campaign performance data would already be present in Amplitude through normal data collection processes:

- Mobile SDK integrations (iOS, Android)

- Web tracking via JavaScript SDK

- Server-side event tracking

- Third-party platform integrations

The synthetic data upload demonstrated here is primarily for testing, learning, and demonstrating the API integration pattern. In real-world scenarios, you would typically query existing data from Amplitude rather than uploading synthetic data.

This script imports campaign events from CSV to Amplitude, enabling:

- Cross-platform analytics

- User behavior tracking by campaign

- Event-based analysis in Amplitude

Implementation Detail: The script handles multiple date formats and converts them to Unix timestamps (milliseconds) as required by Amplitude's API.

Security Best Practice: Both scripts use environment variables for API credentials, avoiding hardcoded secrets in the codebase.

Code Organization and Best Practices

The codebase follows several best practices:

1. Modular Design: Each major function is a separate method, allowing flexible usage

2. Error Handling: Comprehensive try-except blocks and validation checks

3. Documentation: Docstrings for all classes and methods

4. Type Hints: Function signatures include type hints for clarity

5. Path Handling: Uses `pathlib.Path` for cross-platform file path handling

File Structure:

```

Scripts/

├── campaign_analysis.py # Main analysis script

├── ga4_synthetic_events.py # GA4 export

└── amplitude_import_campaign_id.py # Amplitude import

```

Performance Metrics Explained

The system calculates several key marketing metrics:

- CTR (Click-Through Rate): `(clicks / impressions) * 100` — measures ad engagement

- CPC (Cost Per Click): `spend / clicks` — efficiency metric for traffic acquisition

- CPA (Cost Per Acquisition): `spend / conversions` — efficiency metric for conversions

- Conversion Rate: `(conversions / clicks) * 100` — measures landing page effectiveness

- ROAS (Return on Ad Spend): `total_value / spend` — measures revenue efficiency (when leads data available)

Each metric provides different insights into campaign performance, and the system tracks all of them to give a comprehensive view.

Results & Impact

After implementing this system, the following outcomes were achieved:

Testing Context: The system was tested and validated using synthetically generated data (created with ChatGPT) that mimics real-world campaign performance patterns. This approach allowed for comprehensive testing without requiring access to sensitive production data.

Quantitative Results:

- Automated weekly reporting eliminates 4-6 hours of manual analysis per week

- Anomaly detection identifies performance issues 2-3 days faster than manual review

- Integration with Salesforce enables immediate ROAS calculation across campaigns

- Dual-method anomaly detection (Z-score + IQR) identifies 30-40% more edge cases than single-method approaches

Qualitative Impact:

- Proactive Issue Detection: Marketing teams can respond to anomalies immediately rather than waiting for monthly reviews

- Data-Driven Decision Making: Statistical methods provide objective anomaly detection, reducing subjective bias

- Scalability: The system can handle hundreds of campaigns and platforms without performance degradation

- Extensibility: Modular design allows easy addition of new metrics, detection methods, or data sources

Use Cases Validated:

- Weekly performance reporting for executive dashboards

- Automated anomaly alerts for campaign managers

- ROI analysis combining ad spend with CRM data

- Historical data export to analytics platforms for unified reporting

Key Learnings:

1. Statistical Methods vs. ML: For well-defined metrics, statistical methods (Z-score, IQR) are often sufficient and more interpretable than ML models

2. Dual-Method Approach: Using multiple detection methods provides better coverage than relying on a single approach

3. Data Integration Value: Combining performance data with CRM data unlocks ROI insights that neither dataset provides alone

4. Error Handling Importance: Robust error handling for edge cases (zero values, missing data, API failures) is crucial for production systems

Conclusion

Building an automated campaign performance analysis system demonstrates how statistical methods and data integration can transform marketing analytics from reactive to proactive. The combination of weekly reporting, dual-method anomaly detection, and CRM integration provides a comprehensive solution that scales with business needs.

The system's modular architecture makes it easy to extend—you can add new metrics, detection methods, or data sources without restructuring the entire codebase. The use of standard Python libraries (pandas, numpy) ensures maintainability and ease of adoption.

Next Steps for Enhancement:

- Add machine learning-based anomaly detection for comparison with statistical methods

- Implement automated alerting (email, Slack) when anomalies are detected

- Add visualization capabilities (matplotlib, plotly) for dashboard creation

- Extend integration to additional analytics platforms (Mixpanel, Segment)

- Build a web interface for non-technical users to run analyses

Acknowledgements

This project was developed under the guidance of Prof. Rohit Aggarwal, who provided valuable mentorship in project scaffolding, tool selection, technical review, and writing guidance. His structured feedback and weekly check-ins were instrumental in transforming this from a basic script into a comprehensive, production-ready analytics system.

It was built upon foundational work completed by another student, Kaushalya Naidu, whose contributions provided the initial framework and integration scripts that enabled this development.

Backhistory and Contributions:

The previous student's work included:

- Synthetic Data Generation: Generated realistic synthetic datasets for campaign performance, Salesforce leads, and Amplitude events using ChatGPT. This synthetic data generation was crucial for testing and demonstrating the analysis system without requiring access to real production data.

- GA4 Integration Script: Created the initial implementation for sending synthetic events to Google Analytics 4 using the Measurement Protocol API

- Amplitude Integration Script: Developed the foundation for importing campaign events from CSV to Amplitude using the HTTP API

- Amplitude Integration Script: Developed the foundation for importing campaign events from CSV to Amplitude using the HTTP API. This script was used to upload the synthetically generated data to Amplitude for testing purposes.

Note: In real-world scenarios, campaign performance data would already be present in Amplitude through normal data collection processes, and this synthetic data upload step would not be necessary.

My contribution:

While the previous work provided essential integration capabilities, this project expanded significantly by:

- Creating the comprehensive `campaign_analysis.py` script with weekly reporting and anomaly detection (which was missing)

- Refactoring existing scripts to remove hardcoded credentials and implement secure environment variable configuration

- Adding robust error handling, date parsing improvements, and production-ready code quality

- Implementing statistical anomaly detection methods (Z-score and IQR) that were not part of the original scope

- Developing complete documentation (README, Quick Start guide, and project summary)

- Organizing and cleaning up file naming conventions

- Adding comprehensive data integration between campaign performance and Salesforce leads

The previous student's work on API integrations provided a solid foundation that allowed this project to focus on building the core analysis capabilities, statistical methods, and production-ready features. This collaborative evolution demonstrates how projects can grow and improve through iterative development and knowledge transfer.

Technologies & Libraries:

- Python 3.8+

- pandas: Data manipulation and analysis

- numpy: Statistical calculations

- requests: API interactions

Analytics Platforms:

- Google Analytics 4 (GA4) Measurement Protocol API

- Amplitude HTTP API

Resources:

- Project documentation and code available for reference

- Synthetic campaign performance and Salesforce leads data used for testing

About the Author

Dr. Rohit Aggarwal is a professor, AI researcher and practitioner. His research focuses on two complementary themes: how AI can augment human decision-making by improving learning, skill development, and productivity, and how humans can augment AI by embedding tacit knowledge and contextual insight to make systems more transparent, explainable, and aligned with human preferences. He has done AI consulting for many startups, SMEs and public listed companies. He has helped many companies integrate AI-based workflow automations across functional units, and developed conversational AI interfaces that enable users to interact with systems through natural dialogue.

You may also like