Table of Contents

Process Overview

Example: Google Ads Tools

Without MCP (Using Function Calling)

With MCP

Adaptation for ChatGPT Custom GPTs

Why Offer an MCP Server to Your Customers?

Limitations and Challenges of MCP

About the Author

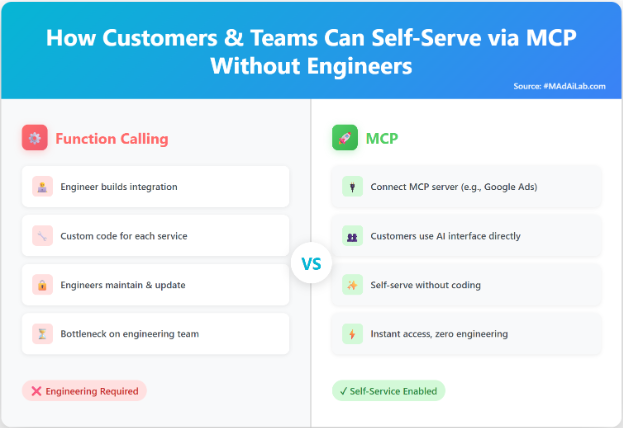

MCP vs. Function Calling: How Customers and Teams Can Self-Serve Without Engineers

Using the Google Ads MCP Server as an example, learn how MCP unlocks new ways to empower customers and internal teams beyond traditional function calling.

You Will Learn:

- How MCP servers (like Google Ads MCP) let customers use services directly from AI interfaces without coding.

- How internal teams can self-serve information through MCP, reducing reliance on engineers.

- The basics of Function Calling and MCP — what they are and how they work.

- The key differences between Function Calling and MCP, and what changes when customers implement tools themselves.

- The main limitations and challenges of adopting MCP in real-world systems.

Modern AI applications often need to interact with external tools, APIs, and data sources. These systems usually involve two key components:

- The LLM (Large Language Model): the reasoning engine that interprets user input and generates structured instructions or text responses (e.g., GPT-4.1, Claude Sonnet, Gemini 2.5, Qwen 3).

- The LLM Controller Layer: the software layer that connects to the LLM via API, manages conversation state, and orchestrates tool or API execution. Controllers include applications such as the ChatGPT web app (chat interface), OpenWebUI, Claude Desktop, Cursor IDE, and Claude Code.

The LLM itself never executes code or calls APIs directly. It only suggests what should happen. The controller interprets those suggestions and decides how and when to carry them out.

Two complementary mechanisms enable this collaboration:

- Function Calling – the foundational mechanism where the LLM suggests structured function calls that the controller executes.

- Model Context Protocol (MCP) – an open infrastructure standard built on top of function calling that allows the LLM controller to dynamically discover and connect to tool servers.

This tutorial explains both concepts, how they relate, and when to use each.

Function Calling

Function calling allows a model to suggest a structured function call (function name and arguments), while the LLM controller executes it.

The model never runs code or calls APIs. It simply proposes which function to call and with what arguments. The controller interprets those suggestions and performs the actual execution.

Process Overview

1. Function Definition: You provide the model with a list of available functions, typically defined in JSON schema format that includes:

- Function name

- Description of what the function does

- Parameters it accepts (with types and descriptions)

- Expected return format

You provide this list as a separate structured API parameter (e.g., tools parameter in OpenAI or Anthropic APIs), and you include it in every API request. For example, you might describe a list of functions like get_weather, get_traffic_status, send_email, etc.

This is like giving the model a "menu" of capabilities—you're describing what's possible, not executing anything yet.

2. Model Analysis: When the user sends a message (e.g., "What's the weather in London?"), the model inspects the available function definitions and determines whether external data is needed.

3. Tool Call Suggestion: The model emits a structured "call" containing:

- The function name it wants to call (e.g., get_weather)

- The arguments to pass (e.g., {"location": "London, UK", "units": "celsius"})

- This is formatted according to the LLM provider's specific schema (OpenAI, Anthropic, etc.)

This is only a suggestion, not actual execution. The model is saying “I think you should call this function with these parameters.”

4. Controller Execution: Your LLM controller receives this suggestion, parses it, and runs the actual function implementation. This might involve:

- Calling external APIs (weather services, databases)

- Performing computations

- Accessing file systems or other resources

The controller is responsible for error handling, authentication, security, and returning valid results.

5. Result Formatting: The controller returns the function output to the model as a message with a special role (tool or function).

6. Final Response: The model receives the tool result, incorporates it into its context, and generates a natural language response for the user: "The current weather in London is partly cloudy with a temperature of 15°C."
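The six steps above can be sketched as a small controller loop. This is a minimal illustration, not a definitive implementation: `fake_llm` is a hypothetical stand-in for the real LLM API call so the example runs offline, and `get_weather` returns hard-coded data instead of calling a weather service. The message and tool-definition shapes follow the OpenAI-style format used later in this article.

```python
import json

# Step 1: tool definitions in the OpenAI-style "tools" format.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Return current weather for a location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {"type": "string", "description": "City and country"},
                    "units": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        },
    }
]


def get_weather(location, units="celsius"):
    """Hypothetical implementation; a real one would call a weather API."""
    return {"location": location, "temperature": 15,
            "units": units, "conditions": "partly cloudy"}


# The controller maps function names to the code that implements them.
IMPLEMENTATIONS = {"get_weather": get_weather}


def fake_llm(messages, tools):
    """Stand-in for the LLM API call (assumption: no network access here).

    Suggests a tool call until a tool result is present, then answers in text.
    """
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        text = (f"The current weather in {data['location']} is {data['conditions']} "
                f"with a temperature of {data['temperature']}°C.")
        return {"role": "assistant", "content": text}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "get_weather",
                "arguments": json.dumps({"location": "London, UK", "units": "celsius"}),
            },
        }],
    }


def run_turn(user_text):
    """Steps 2 to 6: send, execute suggested calls, return results, get the answer."""
    messages = [{"role": "user", "content": user_text}]
    while True:
        reply = fake_llm(messages, TOOLS)  # the tool list goes with every request
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply["content"], messages
        for call in reply["tool_calls"]:
            func = IMPLEMENTATIONS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
            result = func(**args)  # Step 4: the controller, not the model, executes
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(result),
            })


answer, history = run_turn("What's the weather in London?")
print(answer)
```

Note that the loop appends the assistant's suggestion and the tool result to the same message list, which is why the full conversation is resent on each request.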

Model Context Protocol (MCP)

Model Context Protocol is an open standard (created by Anthropic) that defines a client–server protocol for connecting AI models to external tools and data sources in a unified, scalable way.

Core Components

- MCP Server: Hosts tools that complete specific tasks when called. Example: A server exposing a get_orders tool that returns a customer’s last five orders.

- MCP Client: Integrated into the LLM controller. The client:

- Connects to one or more MCP servers

- Discovers available tools dynamically

- Routes tool calls to the correct server

- Manages the communication between the model and tools
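The client responsibilities listed above (connect, discover, route) can be illustrated with a conceptual sketch. It deliberately uses plain in-process Python objects rather than a real MCP SDK or network transport, so `ToolServerSketch`, `ClientSketch`, and the sample data are all hypothetical names chosen for illustration.

```python
class ToolServerSketch:
    """In-process stand-in for an MCP server (no network, no real MCP SDK)."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> (description, callable)

    def list_tools(self):
        return [{"name": n, "description": d} for n, (d, _) in self._tools.items()]

    def call_tool(self, name, arguments):
        _description, func = self._tools[name]
        return func(**arguments)


class ClientSketch:
    """Discovers tools from each connected server and routes calls by tool name."""

    def __init__(self):
        self._routes = {}  # tool name -> server that hosts it

    def connect(self, server):
        # Discovery happens at connect time; nothing is hard-coded in the client.
        for tool in server.list_tools():
            self._routes[tool["name"]] = server

    def available_tools(self):
        return sorted(self._routes)

    def call(self, tool_name, arguments):
        server = self._routes[tool_name]
        return server.call_tool(tool_name, arguments)


def get_orders(customer_id, limit=5):
    # Hypothetical data: seven orders, of which the last `limit` are returned.
    orders = [{"order_id": i, "customer_id": customer_id} for i in range(1, 8)]
    return orders[-limit:]


def fetch_invoice_status(invoice_id):
    # Hypothetical second tool hosted on a different server.
    return {"invoice_id": invoice_id, "status": "paid"}


orders_server = ToolServerSketch(
    "orders", {"get_orders": ("Return a customer's last five orders.", get_orders)})
billing_server = ToolServerSketch(
    "billing", {"fetch_invoice_status": ("Return the status of an invoice.", fetch_invoice_status)})

client = ClientSketch()
client.connect(orders_server)
client.connect(billing_server)

print(client.available_tools())
recent = client.call("get_orders", {"customer_id": "c-42"})
print([order["order_id"] for order in recent])
```

A real MCP client does the same bookkeeping, but the discovery and call steps travel as JSON-RPC messages to a separate server process or remote endpoint.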

Example: Google Ads Tools

Now consider a real-world example. Google recently released a Google Ads MCP server for marketers that exposes tools such as execute_gaql_query, get_campaign_performance, and get_ad_performance.

Let’s compare what engineers would need to do with and without MCP.

Without MCP (Using Function Calling)

| Step | Action | Notes |
| --- | --- | --- |
| 0 | Engineering team creates and configures functions in OpenWebUI. | Functions: execute_gaql_query, get_campaign_performance, get_ad_performance. |
| 1 | User starts a chat session. | OpenWebUI loads the function definitions and sends them with each API request to the LLM. |
| 2 | User types a message in the chat interface and asks an informational question. | e.g., "What is function calling?" |
| 3 | OpenWebUI sends the user's message and the function list to the LLM API. | {"messages": [ {"role": "user", "content": "What is function calling?"} ], "tools": [ {"type": "function", "function": {"name": "execute_gaql_query", "description": "...", "parameters": {...}}}, {"type": "function", "function": {"name": "get_campaign_performance", "description": "...", "parameters": {...}}}, ... ] } |
| 4 | ChatGPT answers the question from its internal knowledge; no function call is needed. | ChatGPT returns the response: {"role": "assistant", "content": "Function calling allows AI models..."} |
| 5 | OpenWebUI receives the response from ChatGPT and displays it. | The conversation continues. |
| 6 | User asks a question requiring external data. | e.g., "Show campaign performance for last month." |
| 7 | OpenWebUI sends the updated conversation with the same tools. | {"messages": [ {"role": "user", "content": "What is function calling?"}, {"role": "assistant", "content": "Function calling allows AI models..."}, {"role": "user", "content": "Show campaign performance for last month"} ], "tools": [ ...same tool definitions as in Step 3... ] } |
| 8 | ChatGPT suggests calling get_campaign_performance with appropriate date parameters. | {"role": "assistant", "content": null, "tool_calls": [{"id": "call_abc123", "type": "function", "function": {"name": "get_campaign_performance", "arguments": "{\"start_date\": \"2025-09-01\", \"end_date\": \"2025-09-30\"}"}}]} |
| 9 | OpenWebUI executes get_campaign_performance with the suggested date parameters and receives the campaign data. | The UI shows an intermediate state indicating that function execution is in progress. Calls get_campaign_performance(start_date="2025-09-01", end_date="2025-09-30") and receives campaign details such as: {"campaigns": [ {"name": "Fall Sale 2025", "impressions": 45000, "clicks": 1200, "cost": 850.50, "conversions": 45}, ... ]} |
| 10 | OpenWebUI sends a new API request to ChatGPT that includes the full conversation so far: the user's messages, the assistant's tool-call suggestion, and the tool's execution result (as a role: "tool" message). | {"messages": [ ...previous messages..., {"role": "assistant", "content": null, "tool_calls": [{"id": "call_abc123", "type": "function", "function": {"name": "get_campaign_performance", "arguments": "{...}"}}]}, {"role": "tool", "tool_call_id": "call_abc123", "name": "get_campaign_performance", "content": "{\"campaigns\": [ {\"name\": \"Fall Sale 2025\", \"impressions\": 45000, \"clicks\": 1200, \"cost\": 850.50, \"conversions\": 45}, {\"name\": \"Brand Awareness\", \"impressions\": 120000, \"clicks\": 3500, \"cost\": 2100.00, \"conversions\": 120} ]}"} ], "tools": [ ...same tool definitions as in Step 3... ] } |
| 11 | ChatGPT generates the final response. | {"role": "assistant", "content": "Here's your campaign performance for September 2025: **Fall Sale 2025** - Impressions: 45,000 - Clicks: 1,200 (2.67% CTR) - Cost: $850.50 - Conversions: 45 (3.75% conversion rate) - Cost per conversion: $18.90 **Brand Awareness** - Impressions: 120,000 - Clicks: 3,500 (2.92% CTR) - Cost: $2,100.00 - Conversions: 120 (3.43% conversion rate) - Cost per conversion: $17.50 The Brand Awareness campaign shows better efficiency with a lower cost per conversion."} |
| 12 | OpenWebUI displays the final response. | The rendered message: "Here's your campaign performance for September 2025", followed by the metric lists for Fall Sale 2025 and Brand Awareness from Step 11 and the closing comparison, "The Brand Awareness campaign shows better efficiency with a lower cost per conversion." |
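The percentages and per-conversion costs in the final response are derived values that the model computed from the raw tool result. As a quick check of that arithmetic, the sketch below recomputes them from the two campaigns shown in the walkthrough. The helper name `derive_metrics` and its output keys are illustrative choices, not part of any Google Ads API.

```python
campaigns = [
    {"name": "Fall Sale 2025", "impressions": 45000, "clicks": 1200,
     "cost": 850.50, "conversions": 45},
    {"name": "Brand Awareness", "impressions": 120000, "clicks": 3500,
     "cost": 2100.00, "conversions": 120},
]


def derive_metrics(campaign):
    """Compute CTR, conversion rate, and cost per conversion for one campaign."""
    return {
        "name": campaign["name"],
        # Click-through rate: clicks as a percentage of impressions.
        "ctr_pct": round(100 * campaign["clicks"] / campaign["impressions"], 2),
        # Conversion rate: conversions as a percentage of clicks.
        "conversion_rate_pct": round(100 * campaign["conversions"] / campaign["clicks"], 2),
        "cost_per_conversion": round(campaign["cost"] / campaign["conversions"], 2),
    }


metrics = [derive_metrics(c) for c in campaigns]
most_efficient = min(metrics, key=lambda m: m["cost_per_conversion"])

for m in metrics:
    print(m)
print("Lowest cost per conversion:", most_efficient["name"])
```

The recomputed values match the response text (2.67% and 2.92% CTR, $18.90 and $17.50 per conversion). One possible design choice is to have the tool return such derived metrics itself, so the model only has to report them rather than calculate them.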

With MCP

If OpenWebUI uses Google’s MCP server, Step 0 changes entirely. The engineering team no longer needs to create functions as in Step 0 of the table above. Instead, Steps 0, 1, 9, and 10 are replaced by the corresponding steps in the table below.

| Step | Action | Notes |
| --- | --- | --- |
| 0 | Engineering team configures the MCP client in OpenWebUI using the Google Ads MCP manifest. | The MCP client typically validates the manifest (schema check, endpoint reachability, etc.) but does not yet request live tool metadata from the server. |
| 1 | User starts a chat session. | The MCP client connects to the MCP server using JSON-RPC over stdio or HTTP and receives the list of tools (names, descriptions, input schemas) from the MCP server: execute_gaql_query, get_campaign_performance, get_ad_performance. |
| 2 to 8 | Steps 2 to 8 remain the same. | |
| 9 | OpenWebUI receives the response from ChatGPT and follows its suggestion. It calls the MCP tool via its MCP client. | {"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "get_campaign_performance", "arguments": {"start_date": "2025-09-01", "end_date": "2025-09-30"}}} |
| 10 | The MCP server processes the request and returns results to OpenWebUI. | Sends campaign details such as: {"campaigns": [ {"name": "Fall Sale 2025", "impressions": 45000, "clicks": 1200, "cost": 850.50, "conversions": 45}, ... ]} |
| 11 onward | The rest remains the same: OpenWebUI sends the tool result to ChatGPT, which generates the final response. | |
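To make the MCP-specific steps more concrete, the sketch below builds the two JSON-RPC requests involved (`tools/list` for discovery, `tools/call` for execution) and converts a discovered tool descriptor into the OpenAI-style function definition that the controller sends to the LLM. The `name`, `description`, and `inputSchema` fields follow the MCP specification; the sample descriptor content (description text and parameter schema) is a hypothetical example, not the actual Google Ads server output.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id per connection


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request envelope as used by MCP."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def to_function_definitions(list_result):
    """Convert a tools/list result into OpenAI-style function definitions."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool["name"],
                "description": tool.get("description", ""),
                "parameters": tool["inputSchema"],
            },
        }
        for tool in list_result["tools"]
    ]


# Step 1: discovery request sent when the chat session starts.
list_request = jsonrpc_request("tools/list")

# Hypothetical tools/list result (schema details are assumptions).
sample_list_result = {
    "tools": [
        {
            "name": "get_campaign_performance",
            "description": "Return performance metrics for campaigns in a date range.",
            "inputSchema": {
                "type": "object",
                "properties": {
                    "start_date": {"type": "string", "description": "YYYY-MM-DD"},
                    "end_date": {"type": "string", "description": "YYYY-MM-DD"},
                },
                "required": ["start_date", "end_date"],
            },
        }
    ]
}
definitions = to_function_definitions(sample_list_result)

# Step 9: the LLM's suggestion is forwarded to the server as a tools/call request.
call_request = jsonrpc_request("tools/call", {
    "name": "get_campaign_performance",
    "arguments": {"start_date": "2025-09-01", "end_date": "2025-09-30"},
})

print(json.dumps(call_request))
```

From the LLM's point of view nothing changes: it still receives a `tools` list and suggests a call. Only the source of the definitions and the execution path differ.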

Adaptation for ChatGPT Custom GPTs

If using ChatGPT's chat interface with custom GPTs instead of OpenWebUI, the core flow remains the same but the function execution mechanism differs significantly:

- Step 0 would involve defining "Actions" (OpenAI's term for external API integrations) in the custom GPT configuration using an OpenAPI schema, rather than loading internal plugins. These Actions point to external API endpoints that you host or subscribe to.

- Step 9 changes fundamentally: instead of executing functions internally, ChatGPT makes an HTTP POST request to your external API endpoint (e.g., https://your-api.example.com/campaign-performance). The request includes the function arguments as JSON in the request body, along with any required authentication headers (API keys, OAuth tokens).

- Your external API server then receives the HTTP request, authenticates it, queries the Google Ads API, processes the data, and returns an HTTP response with the campaign data in JSON format.

The key architectural difference: OpenWebUI executes functions in its own runtime environment (internal plugins), while custom GPTs delegate function execution to external API services via HTTP. Despite this difference, the conversation flow, message array structure, and ChatGPT's role in determining when to call functions and synthesizing responses remain identical. This same pattern applies to other platforms like Anthropic's Claude with tool use, Microsoft Copilot Studio, or any LLM controller supporting function calling.
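For illustration, an Action for the campaign-performance endpoint might be described with an OpenAPI document along the following lines. This is a rough sketch built as a Python dictionary: the server URL and path come from the example above, while the title, `operationId`, and parameter names are assumptions made for this example rather than a published schema.

```python
import json

# Hypothetical OpenAPI description of one Action (names are assumptions).
action_schema = {
    "openapi": "3.1.0",
    "info": {"title": "Campaign Performance API", "version": "1.0.0"},
    "servers": [{"url": "https://your-api.example.com"}],
    "paths": {
        "/campaign-performance": {
            "post": {
                "operationId": "getCampaignPerformance",
                "summary": "Return campaign metrics for a date range.",
                "requestBody": {
                    "required": True,
                    "content": {
                        "application/json": {
                            "schema": {
                                "type": "object",
                                "properties": {
                                    "start_date": {"type": "string", "format": "date"},
                                    "end_date": {"type": "string", "format": "date"},
                                },
                                "required": ["start_date", "end_date"],
                            }
                        }
                    },
                },
                "responses": {
                    "200": {"description": "Campaign metrics as JSON"}
                },
            }
        }
    },
}

# The JSON form is what would be pasted into the custom GPT's Action editor.
print(json.dumps(action_schema, indent=2))
```

The schema plays the same role as the function definitions in the earlier walkthrough: it tells the model which operations exist and what arguments they take.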

Why Offer an MCP Server to Your Customers?

Providing your own MCP server, instead of letting customers build functions using your APIs, drastically lowers the technical barrier for your customers and improves both integration efficiency and user experience.

| Aspect | MCP Approach | Function Calling Approach | Winner & Why |
| --- | --- | --- | --- |
| Initial Setup Time | Customer adds your MCP server URL, configures credentials once, and starts using tools immediately. | Customer must study your APIs, code functions, test, debug, and maintain them. | ✅ MCP - 95% faster integration. |
| Technical Skills Needed | Your customers need minimal technical knowledge, as they only need to edit configuration files. | Your customers need software development expertise or access to an engineering team to build functions. | ✅ MCP - Democratizes access for your non-technical customers. They don't need to be developers or have access to an engineering team. |
| Code Responsibility | You develop, host, and manage common workflows as tools. | Every customer has to code, host, and manage functions separately. | ✅ MCP - You manage tools centrally, making it easier for your customers. |
| Feature Updates | Customers automatically get the latest features and fixes. | Customers must manually update their code as your APIs evolve. | ✅ MCP - Zero burden on your customers to upgrade. |
| Cross-Platform Reusability | Works across all LLM controllers supporting MCP with zero changes. | Each customer must rewrite functions per LLM controller platform. | ✅ MCP - True "write once, run anywhere" for AI integrations. |
| Total Cost of Ownership | No developer or infrastructure cost for customers. | Customers bear development, hosting, and maintenance costs. | ✅ MCP - Dramatically lowers TCO. |
| Customization Flexibility | Limited to your provided tools. | Customers have full control to extend logic. | ✅ Function Calling - Better for power users needing custom workflows. |

From a service-provider perspective:

- MCP servers make it dramatically easier for customers to use your services without requiring engineering resources.

- Customers save cost and effort, and are more likely to rely on your managed tools long-term.

- MCP doesn’t replace your APIs — advanced users will still build on your raw endpoints for custom workflows.

- You’ll incur extra cost maintaining both APIs and the higher-level workflows your MCP server exposes.

In short, Function Calling gives developers maximum flexibility, while MCP delivers scalable, frictionless integration for the broader market. The two approaches complement each other rather than compete.

Empowering Non-Technical Teams Through MCP

One of the most powerful outcomes of adopting MCP is how it democratizes access to AI-driven automation and data workflows.

In a typical organization today, tasks like querying analytics data, checking campaign performance, or triggering system actions require help from engineers or data teams. With MCP:

- Business, marketing, and operations staff can interact with AI tools directly — no coding, SDKs, or API knowledge needed.

- Tools are predefined, validated, and centrally managed by technical teams, so non-technical users can safely use them via natural-language prompts.

- Each department (marketing, sales, HR, finance) can have its own MCP servers exposing relevant workflows — like get_campaign_metrics, fetch_invoice_status, or generate_hiring_summary.

- Because MCP tools are discoverable at runtime, employees can explore capabilities dynamically instead of depending on documentation or custom dashboards.

This model effectively turns AI chat interfaces (like ChatGPT, Claude Desktop, or OpenWebUI) into functional copilots for every role — built on a controlled, maintainable backend infrastructure.

From a business perspective:

- Cuts down support and ticket volume to engineering.

- Speeds up decision-making.

- Keeps compliance and data access under centralized governance.

Limitations and Challenges of MCP

- Ecosystem Maturity

MCP is still very new (originating from Anthropic). Only a few LLM controllers currently support it natively. Most integrations are experimental or rely on unofficial client libraries.

- Implementation Complexity

Hosting an MCP server isn’t trivial: you have to build a compliant JSON-RPC API layer, handle schema validation, manage authentication, and keep everything performant under real-time chat loads.

- Security & Access Control

Because MCP servers expose powerful tools directly to models, misconfigured endpoints can leak or damage data. Authentication, rate limiting, and scoped permissions must be airtight.

- Performance Overhead

The extra layer of indirection (client ↔ server ↔ model) can add latency. For high-frequency tool calls, this can become noticeable, especially over HTTP transports when many tools are being registered dynamically.

- Limited Standardization Across Vendors

While MCP defines the protocol, each vendor interprets it slightly differently. Tool discovery, auth schemes, and schema conventions aren’t always 100% compatible between OpenAI, Anthropic, or local controller ecosystems.

- Debugging & Observability Gaps

Unlike traditional function-calling setups where you control both ends, MCP often spans multiple systems. That makes debugging harder: logs, tracing, and error visibility can be fragmented across the LLM controller, MCP client, and the tool server.
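As one small illustration of the scoped-permissions point under Security & Access Control, a server or controller could check a caller's role against an allowlist before any tool runs. The sketch below is only a minimal example of that idea: the role names, the `ROLE_SCOPES` mapping, and the `guarded_call` helper are hypothetical, and a production setup would also need authentication and rate limiting.

```python
# Hypothetical mapping of roles to the tools they may call.
ROLE_SCOPES = {
    "marketing": {"get_campaign_performance", "get_ad_performance"},
    "analyst": {"get_campaign_performance", "get_ad_performance", "execute_gaql_query"},
}


class ToolAccessError(Exception):
    """Raised when a role tries to call a tool outside its scope."""


def authorize(role, tool_name):
    """Raise ToolAccessError unless the role is allowed to call the tool."""
    allowed = ROLE_SCOPES.get(role, set())  # unknown roles get no access
    if tool_name not in allowed:
        raise ToolAccessError(f"role '{role}' may not call '{tool_name}'")


def guarded_call(role, tool_name, arguments, execute):
    """Check permissions first, then delegate to the real executor."""
    authorize(role, tool_name)
    return execute(tool_name, arguments)


def fake_execute(tool_name, arguments):
    # Stand-in for the real tool execution (assumption for this sketch).
    return {"tool": tool_name, "arguments": arguments, "status": "ok"}


allowed_result = guarded_call(
    "marketing", "get_campaign_performance",
    {"start_date": "2025-09-01", "end_date": "2025-09-30"}, fake_execute)
print(allowed_result["status"])

try:
    guarded_call("marketing", "execute_gaql_query", {"query": "SELECT ..."}, fake_execute)
    denied = False
except ToolAccessError as error:
    denied = True
    print("Denied:", error)
```

Keeping the check in one wrapper means every tool call passes through the same gate, which also gives a natural place to add logging for the observability gaps mentioned above.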

About the Author

Dr. Rohit Aggarwal is a professor, AI researcher and practitioner. His research focuses on two complementary themes: how AI can augment human decision-making by improving learning, skill development, and productivity, and how humans can augment AI by embedding tacit knowledge and contextual insight to make systems more transparent, explainable, and aligned with human preferences. He has done AI consulting for many startups, SMEs and publicly listed companies. He has helped many companies integrate AI-based workflow automations across functional units, and developed conversational AI interfaces that enable users to interact with systems through natural dialogue.
