Table of Contents

1. The AI User 🎯: The Source of Ground Truth

2. The AI Manager / Conductor 🎼: The Strategic Orchestrator

3. The AI Engineer / Builder 🛠️: The Technical Implementer

Go Deeper: A Comprehensive Role Breakdown

Conclusion: AI is a Team Sport

About the Author

What AI Skills Do Engineers, Managers, and Users Actually Need?

Cut through the AI hype with a practical framework that defines who does what—so your teams can build, manage, and apply AI systems that actually deliver value.

The mandate has come down from the top: "We need to be an AI-first company." Instantly, a wave of uncertainty sweeps across the organization. Managers scramble to hire "AI talent," while employees wonder which online course will make them relevant. Does the finance team need to learn Python? Should customer service leads be fine-tuning large language models?

This is the central problem facing businesses today: a profound lack of clarity on who needs what kind of AI skills.

This article provides a blueprint to cut through the confusion. We will move beyond the hype and define the three distinct, essential roles that make AI work in the real world: the AI User, the AI Manager/Conductor, and the AI Engineer/Builder. By understanding the specific contributions of each, you can finally bring clarity to your AI strategy, empower your entire workforce, and build the collaborative engine needed for sustained innovation.

True success with AI doesn't come from hiring a handful of technical geniuses. It comes from building a well-orchestrated team with a diverse, complementary set of skills.

1. The AI User 🎯: The Source of Ground Truth

The AI User role is filled by the domain experts and end-users who provide essential real-world validation and feedback. They are the ultimate source of ground truth, ensuring that the AI solution is practical, relevant, and effective in the context of their daily work.

Key Responsibilities:

- Domain Expertise: Share tacit knowledge, explain nuanced decision-making processes, and validate AI outputs against real-world scenarios.

- Testing & Feedback: Participate in pilot testing, report edge cases and system failures, and provide ongoing performance feedback during actual usage.

- Requirements Validation: Ensure proposed solutions align with actual work needs and validate that technical implementations meet workflow requirements.

- Quality Assurance: Grade AI outputs, test different prompt variations, and identify when outputs fall short in practice.
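To make the Quality Assurance item concrete, here is a minimal Python sketch of how User grades for different prompt variations might be summarized. The variant names, the 1-5 grading scale, and the function names are assumptions for illustration, not part of any specific tool.

```python
from collections import defaultdict
from statistics import mean


def summarize_grades(grades):
    """Average User grades per prompt variant.

    `grades` is a list of (variant_name, score) pairs; the 1-5 scale
    used below is a hypothetical choice.
    """
    by_variant = defaultdict(list)
    for variant, score in grades:
        by_variant[variant].append(score)
    return {variant: mean(scores) for variant, scores in by_variant.items()}


def best_variant(grades):
    """Return the variant with the highest average grade."""
    averages = summarize_grades(grades)
    return max(averages, key=averages.get)


# Hypothetical grades collected from Users during a pilot.
grades = [
    ("prompt_a", 4), ("prompt_a", 5), ("prompt_a", 3),
    ("prompt_b", 2), ("prompt_b", 3), ("prompt_b", 4),
]
print(summarize_grades(grades))
print(best_variant(grades))
```

Even a simple summary like this gives Conductors a shared, comparable signal instead of scattered anecdotes.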

2. The AI Manager / Conductor 🎼: The Strategic Orchestrator

AI Managers, or "Conductors," are the strategic orchestrators who bridge business needs with technical implementation. They translate organizational goals into a coherent AI strategy and ensure that all parts of the team are working in harmony toward a shared, value-driven objective.

Key Responsibilities:

- Strategic Direction: Define AI scope, boundaries, and success criteria in business terms; align AI behavior with organizational goals.

- Architecture Guidance: Make high-level design decisions, determine escalation points, and specify business requirements for AI capabilities.

- Knowledge Translation: Lead tacit knowledge extraction from users and translate expert insights into structured guidance for builders.

- Evaluation Design: Create comprehensive evaluation frameworks, define rubrics aligned with organizational standards, and prioritize improvements based on business impact.

- Cross-functional Coordination: Aggregate feedback streams, coordinate between users and builders, and manage the continuous improvement process.
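As an illustration of Evaluation Design, the sketch below shows one way a Conductor's rubric could be written down so that Builders can apply it consistently. The criteria, weights, and escalation threshold are hypothetical values chosen for the example; a real rubric would come from organizational standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float  # relative business importance, set by the Conductor


# Hypothetical rubric and threshold, for illustration only.
RUBRIC = [
    Criterion("accuracy", 0.5),
    Criterion("tone", 0.2),
    Criterion("policy_compliance", 0.3),
]
ESCALATION_THRESHOLD = 0.7


def weighted_score(ratings, rubric=RUBRIC):
    """Combine per-criterion ratings (each in [0, 1]) into one score."""
    total_weight = sum(c.weight for c in rubric)
    return sum(c.weight * ratings[c.name] for c in rubric) / total_weight


def needs_escalation(ratings, threshold=ESCALATION_THRESHOLD):
    """Flag an output for human review when its score is below the threshold."""
    return weighted_score(ratings) < threshold


good = {"accuracy": 1.0, "tone": 0.5, "policy_compliance": 1.0}
weak = {"accuracy": 0.4, "tone": 1.0, "policy_compliance": 0.5}
print(round(weighted_score(good), 2), needs_escalation(good))
print(round(weighted_score(weak), 2), needs_escalation(weak))
```

Keeping weights and the threshold as explicit, named values lets the Conductor change business priorities without touching the scoring logic.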

3. The AI Engineer / Builder 🛠️: The Technical Implementer

The AI Engineers, or "Builders," are the technical implementers who build, deploy, and maintain AI systems. They are the hands-on creators responsible for turning strategic plans and architectural designs into robust, scalable, and reliable software.

Key Responsibilities:

- System Implementation: Design and code AI capabilities, control flows, data pipelines, and integration with existing systems.

- Technical Architecture: Implement monitoring, guardrails, safety measures, and robust execution frameworks with proper error handling.

- Performance Optimization: Build evaluation systems, implement A/B testing, optimize for cost/latency/accuracy, and maintain CI/CD pipelines.

- Infrastructure Development: Create data collection systems, prompt engineering frameworks, and automated feedback incorporation mechanisms.

- Continuous Deployment: Implement updates, monitor performance, provide technical root-cause analysis, and maintain system reliability.
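The "robust execution frameworks with proper error handling" mentioned above can take many forms. One common pattern is retrying a failed model call and then falling back to a safer path, such as human escalation. The sketch below assumes hypothetical `primary` and `fallback` callables and a made-up `ModelError` exception; it is not tied to any particular AI library.

```python
import time


class ModelError(Exception):
    """Hypothetical error raised when a model call fails."""


def call_with_fallback(primary, fallback, prompt, retries=2, delay_s=0.0):
    """Try `primary` up to `retries + 1` times, then use `fallback`.

    Returns (answer, source) so callers can log which path produced the result.
    """
    for attempt in range(retries + 1):
        try:
            return primary(prompt), "primary"
        except ModelError:
            if attempt < retries:
                # Simple exponential backoff between attempts.
                time.sleep(delay_s * (2 ** attempt))
    return fallback(prompt), "fallback"


def always_fails(prompt):
    """Stand-in for an unavailable model."""
    raise ModelError("model unavailable")


def escalate_to_human(prompt):
    """Stand-in for a hand-off point defined by the Conductor."""
    return f"Escalated to a human reviewer: {prompt}"


print(call_with_fallback(always_fails, escalate_to_human, "Refund request"))
```

Returning the source alongside the answer supports the monitoring and root-cause analysis duties listed above, since every fallback is visible in the logs.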

The Collaborative Dynamic: A Continuous Feedback Loop

These roles do not operate in isolation. Their power comes from their interaction in a continuous feedback loop: Users provide domain expertise and real-world validation, Conductors translate business needs into strategic direction, and Builders implement technical solutions that Users validate and that are refined under Conductor guidance. This iterative cycle is the engine of successful AI development, ensuring that technology remains firmly anchored to business value and human experience.

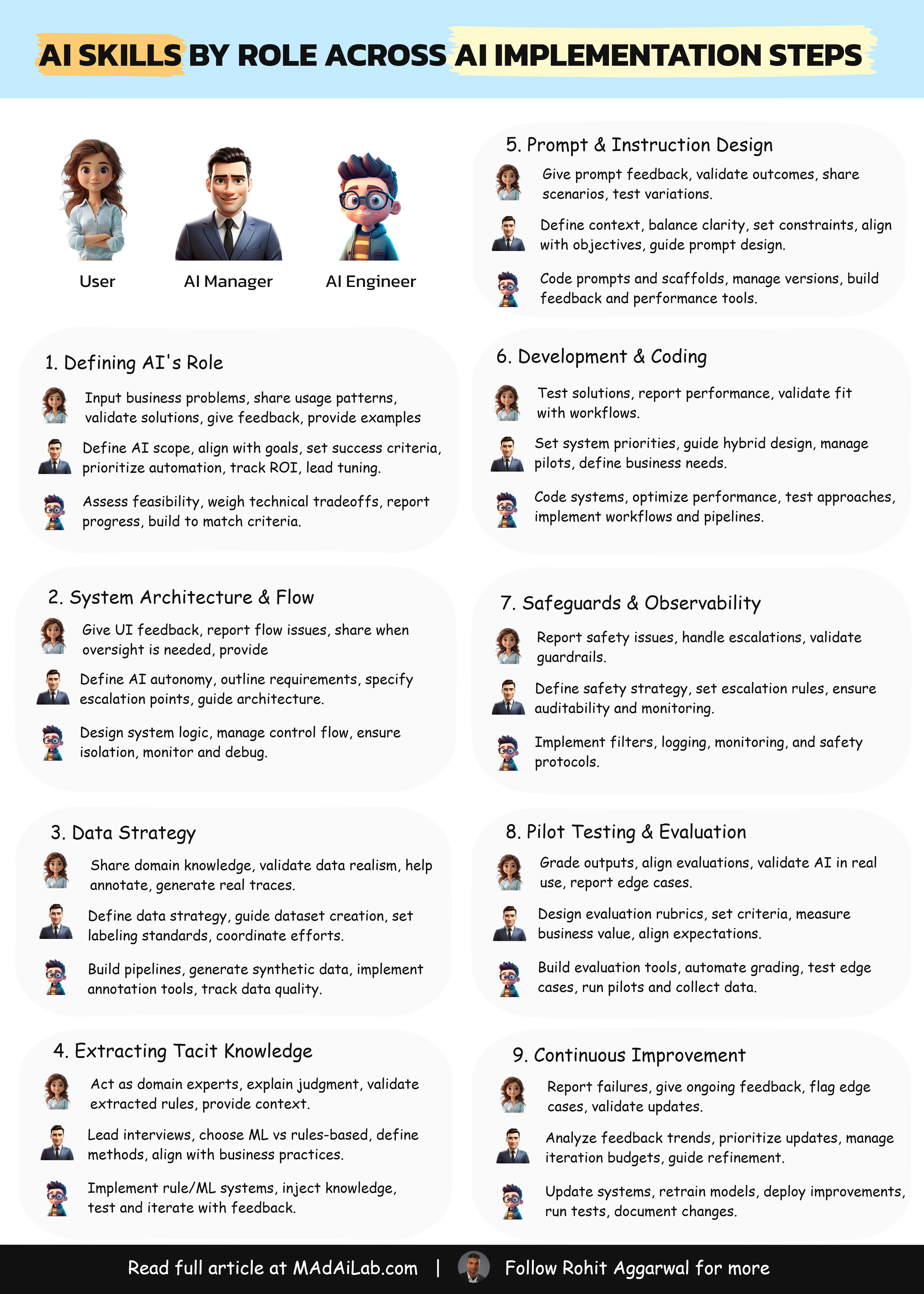

Go Deeper: A Comprehensive Role Breakdown

Want a more detailed look at how these roles collaborate? We've created a comprehensive chart that breaks down the specific responsibilities for Builders, Conductors, and Users across 9 critical dimensions—from Data Strategy to Safeguards.

| Dimension | AI Users | AI Managers / Conductors | AI Engineers / Builders |
| --- | --- | --- | --- |
| 1. AI Role in Workflow, Scope Definition, Success Criteria | • Provide input on business problems and expected outcomes when prompted • Share real-world usage patterns and pain points • Validate that proposed solutions align with actual work needs • Give feedback on success criteria relevance to daily tasks • Supply real-world examples of the problem to clarify pain points | • Define AI's role, scope, and boundaries based on business understanding • Align AI behavior with business goals and reduce ambiguity • Set success criteria in measurable business terms rather than technical metrics • Identify high-impact areas where AI can accelerate decisions or reduce manual effort • Make cost-conscious design decisions on what outputs add value • Prioritize between intelligent automation vs rule-based solutions • Track ROI and evaluate if AI achieves intended goals • Lead continuous collaboration for tuning systems to evolving business needs | • Collaborate with Conductors on technical feasibility of scope and boundaries • Provide input on cost implications of different technical approaches • Assess technical tradeoffs for various implementation options • Implement progress reporting and clarification mechanisms in code • Build systems that support the success criteria defined by Conductors |
| 2. System Architecture, Control Flow & Tooling | • Provide feedback on user interface and interaction patterns when using the system • Report issues with AI responsiveness, confusion, or escalation processes • Share insights on when human oversight feels necessary in their workflow • Experience the workflow and trigger human-escalation paths, providing feedback on flow breakpoints | • Define when AI should act autonomously vs escalate to human supervision • Collaborate with Builders on high-level architecture decisions • Specify business requirements for AI capabilities and interaction patterns • Determine escalation and hand-off points based on business risk • Define scope of AI interactions with APIs and systems • Guide decomposition of complex processes into manageable components • Ensure monitoring / auditing hooks implemented by Builders meet formal governance needs | • Design AI capabilities, memory, tools, and interaction patterns systematically • Implement well-scoped architecture for inputs, outputs, and system coordination • Structure control flow using finite-state logic, timeouts, and hand-off points • Design sandboxed execution with rate limits, API boundaries, and isolation • Prevent cascading failures and abuse during autonomous operation • Implement monitoring pipelines and tooling for debugging and auditing • Code systematic experimentation with different execution sequences |
| 3. Data Strategy: Collection, Annotation & Synthesis | • Provide domain expertise on what constitutes representative real-world scenarios • Identify edge cases and failure points from their daily experience • Validate synthetic data realism against actual work scenarios • Participate in annotation of ambiguous cases when guided by Conductors • Generate real-world traces by using the system | • Plan overall data strategy and identify what data truly matters • Ensure data is representative and captures full range of business scenarios and edge cases • Coordinate between Users and Builders for purposes including data gathering, processing, and annotation • Guide synthetic dataset creation using domain expertise • Define data-level success criteria and labeling guidelines | • Implement data collection and processing systems • Build synthetic dataset generation based on Conductor guidance • Code annotation tools and workflows • Implement data quality tracking and correction mechanisms • Build systems to stress-test models using synthetic examples • Create data pipelines that support representative and exhaustive datasets • Share metrics & data issues with Conductors for decision-making |
| 4. Tacit Knowledge Extraction (ML / Rule-Based) | • Act as domain experts: answer structured interviews, walk through tacit decisions, review extracted rules • Share intuitive knowledge and habitual decisions through guided questioning • Explain nuanced judgment processes they use in their work • Validate extracted knowledge against their real-world experience • Provide context on organization-specific practices and exceptions | • Lead tacit knowledge extraction through structured interviews with Users • Translate expert insights into structured guidance for systems • Choose between rule-based and ML approaches for knowledge capture • Collaborate with Builders on identifying data and planning ML analysis if needed for knowledge extraction • Define external guidance methods to handle organization-specific information • Ensure extracted knowledge aligns with business practices and constraints | • Implement rule-based systems based on articulated organizational practices • Experiment with various ML models on identified data and build an ML pipeline for tacit knowledge extraction • Code knowledge injection methods for context not in training data • Evaluate performance on dedicated tacit-knowledge test suites and iterate with Conductors & Users on gaps |
| 5. Prompt & Instruction Engineering | • Provide feedback on prompt effectiveness based on actual usage • Validate that instructions produce expected results in real scenarios • Share examples of nuanced or rare scenarios they encounter • Provide workflow examples at Conductors' request • Test different prompt variations and report on quality differences • Use prompts provided by Conductors for effective AI usage | • Determine context requirements - what background information is necessary • Balance specificity and conciseness based on business needs • Separate must-have constraints from nice-to-haves • Define measurable definitions to replace ambiguous terms • Align context with business objectives and confidentiality requirements • Guide prompt structure for complex reasoning scenarios • Gather few-shot examples from Users for prompts • Collaborate with Builders on experimenting with | • Implement prompt logic and instructions in structured input-output behavior • Code prompt templates, dynamic context injectors, reasoning scaffolds • Implement reasoning guidance for complex scenarios requiring thought processes • Code task interdependence logic for combined or split instructions • Implement framework for managing prompt versions • Build pipelines for tracking prompt performance across various models • Build interfaces for Users to provide feedback on prompts • Optimize prompt engineering for performance and accuracy |
| 6. Development / Coding | • Test coded solutions in real-world scenarios and provide feedback • Report on system performance during actual usage • Validate that technical implementation meets their workflow needs | • Collaborate with Builders on technical approach assessment • Define business requirements for system performance and accuracy • Set priorities for optimization around resources needed, cost, and latency based on business impact • Guide hybrid workflow design (AI + rule-based + APIs) from business perspective • Prioritize feature backlog & experimentation roadmap • Coordinate pilot roll-outs with Users (timing, comms, opt-in/opt-out) | • Implement all technical aspects of data processing and AI integration • Manage pipeline for training AI models and using them for inference • Code systematic testing of different approaches against metrics • Optimize for costs, processing time, accuracy and business alignment • Implement efficiency optimizations - reducing tool calls, reusing outputs • Code hybrid workflows coordinating AI, rule-based systems, and APIs • Translate architecture and control flow into executable code • Implement execution sequences with fallback and retry mechanisms • Maintain CI/CD pipelines and formal technical documentation |
| 7. Safeguards, Guardrails & Observability | • Report safety issues and harmful, off-policy, or unexpected behaviors during usage • Handle scenarios that the AI directs to Users • Provide feedback and share their handling of scenarios, especially escalated ones, via provided interfaces • Validate guardrail effectiveness based on real-world system interaction | • Define defense-in-depth strategy requirements based on business risk • Set content-filter rules, thresholds and escalation procedures for human oversight • Determine what should be auditable and traceable for business purposes • Define guardrail activation triggers for uncertainty and violations • Specify monitoring requirements for business-critical functions • Review observability reports with Builders and explore alternate high-level design discussions if needed | • Implement hallucination detection, PII filters, content filters, and fallback policies • Code activation triggers for uncertainty and guardrail violations • Build traceability infrastructure with structured logging of all agent behavior • Implement guardrails for safety and misuse prevention • Develop robust monitoring systems to detect silent degradation • Create audit trails for reproducing and improving agent behavior |
| 8. Pilot Testing & Evaluation (LLM + Human) | • Participate in human grading of AI outputs and quality • Participate in calibration reviews to align evaluation pipelines with human preferences • Provide real-world validation of AI performance in actual work scenarios • Give feedback on evaluation criteria relevance to business needs • Test edge cases during pilot phases and report findings | • Design comprehensive evaluation frameworks assessing business value • Define evaluation rubrics that align with organizational standards • Determine appropriate scale and criteria for subjective qualities • Ensure pilot performance measurement against business objectives • Guide human-expectation alignment checks for organizational conformity • Work with Builders to create representative validation sets for AI evaluations | • Implement LLM graders with unambiguous scoring schemes • Code multi-faceted evaluation approaches combining various assessment methods • Build edge case testing systems and tool behavior evaluation • Implement monitoring pipelines and feedback loops for continuous improvement • Create validation systems to align model judgments with standards • Code evaluation infrastructure for technical performance indicators • Implement automated evaluators, metrics collectors, A/B testing of various AI approaches • Execute pilot runs, gather logs, compute scores |
| 9. Continuous Improvement & Feedback Loops | • Actively identify when outputs fall short in real-world usage • Provide ongoing feedback on system performance and areas for improvement • Report edge cases and unexpected behaviors encountered during regular use • Validate improvements against actual work requirements | • Aggregate feedback streams, analyze trends, reprioritize updates and guide targeted feedback and improvement strategies based on business impact • Schedule retraining or prompt revisions and lead continuous collaboration to keep AI aligned with evolving business goals • Guard budget & ROI during long-term iteration and prioritize improvement efforts based on business value and user impact • Guide Builders on next sprints and ensure improvements align with changing processes and constraints • Drive cross-functional feedback sessions that include Users and Builders and coordinate iterative refinement across all stakeholders | • Patch prompts, data, code; retrain models and implement monitoring pipelines for reliable deployment performance • Refine monitoring & alert thresholds and code iterative engineering approaches with rigorous testing and observation • Deploy updates and verify impact and build systems for prompt refinement in response to tool function feedback • Provide technical root-cause analyses to Conductors and implement feedback incorporation mechanisms for data, instructions, and design • Implement rapid A/B or canary tests and roll back deployments if regressions are detected • Document changes and publish release notes for Conductors & Users and create automated improvement suggestions based on performance data • Build systems that adapt based on real-world usage observations |

Conclusion: AI is a Team Sport

The narrative that building AI is solely the domain of hooded figures typing in a dark room is officially obsolete. Creating intelligent systems that deliver real, lasting business value is a fundamentally human and collaborative endeavor.

Organizations that succeed in the AI era will be the ones that stop searching for mythical, all-in-one "AI experts" and start intentionally cultivating the distinct, complementary skills of Builders, Conductors, and Users. By fostering a culture of deep respect and creating structured feedback loops between these three roles, you can move beyond the hype and build an organization that doesn't just use AI, but masters it.

As you look at your own organization, ask yourself: Have we only hired the Builders? Who is our Conductor? And are we truly listening to our Users?

#AIAdoption #AITransformation #AIUser #AIConductor #AIEngineer #OrgDesign #TeamAI #AITalent #AIXRoles #AIInPractice #CollaborationInAI #AILeadership

About the Author

Dr. Rohit Aggarwal is a professor, AI researcher, and practitioner. His research focuses on two complementary themes: how AI can augment human decision-making by improving learning, skill development, and productivity, and how humans can augment AI by embedding tacit knowledge and contextual insight to make systems more transparent, explainable, and aligned with human preferences. He has done AI consulting for many startups, SMEs, and publicly listed companies. He has helped many companies integrate AI-based workflow automations across functional units, and developed conversational AI interfaces that enable users to interact with systems through natural dialogue.
